Novel view synthesis is the process of generating a 3D view, or sometimes a 3D scene, from available 2D images captured from different poses, orientations and illuminations.

Human body view synthesis is a challenging problem, especially when the body is in motion. Present view synthesis methods employ either image-based rendering or implicit neural representations to construct the 3D view. However, the major hindrance in these approaches is hardware complexity.

# Convert 2D to 3D online: images and movies

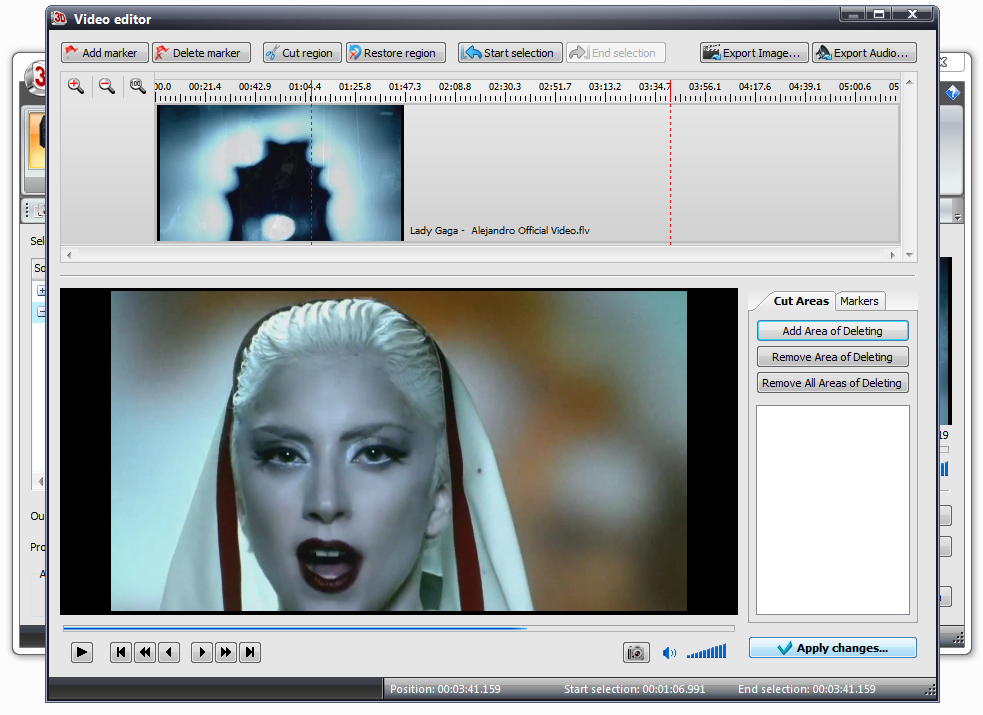

Novel view synthesis finds interesting applications in movie production, sports broadcasting and telepresence.

A video recording of the presentation by Konrad entitled “Automatic 2D-to-3D image conversion using 3D examples from the Internet” at the SPIE Stereoscopic Displays and Applications Symposium, January 2012, San Francisco, is shown at the top of this page.

Publications:

- … Ishwar, “2D-to-3D image conversion by learning depth from examples,” in 3D Cinematography Workshop (3DCINE’12) at CVPR’12, pp. 16-22, June 2012.
- … Mukherjee, “Automatic 2D-to-3D image conversion using 3D examples from the Internet,” in Proc. SPIE Stereoscopic Displays and Applications, vol. …
- … Rowley, “Image saliency: From intrinsic to extrinsic context,” in Proc. …

Background: Among 2D-to-3D image conversion methods, those involving human operators have been the most successful but also time-consuming and costly. Fully automatic methods typically make strong assumptions about the 3D scene. For example, faster or larger objects are assumed to be closer to the viewer, and higher-frequency texture is assumed to belong to objects located farther away from the viewer. Although such methods may work well in some cases, in general it is very difficult, if not impossible, to construct a deterministic scene model that covers all possible background and foreground combinations. In practice, such methods have not achieved the same level of quality as the semi-automatic methods.

Summary: In this project, we explore a radically different approach inspired by our work on saliency detection in images. Instead of relying on a deterministic scene model for the input 2D image, we propose to “learn” the model from a large dictionary of 3D images, such as YouTube 3D or the NYU Kinect dataset. Our novel approach is built upon a key observation and an assumption. The key observation is that among the millions of 3D images available on-line, there are likely many whose 3D content matches that of the 2D input (query). The key assumption is that two 3D images (e.g., stereopairs) whose left images are photometrically similar are likely to have similar depth fields.

In our approach, we first find a number of on-line 3D images that are close photometric matches to the 2D query, and then we extract depth information from these 3D images. If the 3D images are provided in the form of stereopairs, this necessitates disparity/depth estimation. In the case of the NYU dataset, however, depth fields are provided, since all images were captured indoors by a Kinect camera. The depth/disparity fields of the best photometric matches differ because of differences in underlying image content, level of noise, distortions, etc. We therefore combine them by taking their median and post-process the result using cross-bilateral filtering. We apply the resulting median depth/disparity field to the 2D query to obtain the corresponding right image, while handling occlusions and newly exposed areas in the usual way.

Figure: 2D query (left), median depth field computed from the top 20 photometric matches on YouTube 3D (center), and depth field after post-processing using a cross-bilateral filter (right).

Results: We have applied the above method in two scenarios. First, we searched YouTube 3D videos for the most similar frames. Then, we repeated the experiments on a smaller NYU Kinect dataset of indoor scenes. While far from perfect, the presented results demonstrate that on-line repositories of 3D content can be used for effective 2D-to-3D image conversion. With the continuously increasing amount of 3D data on-line and the rapidly growing computing power in the cloud, the proposed framework seems a promising alternative to operator-assisted 2D-to-3D conversion.

Below are three examples of automatic 2D-to-3D image conversion from YouTube 3D videos, shown as anaglyph images.
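The retrieval-and-fusion step described above can be sketched in a few lines of Python. That step first finds the closest photometric matches to the 2D query and then takes the median of their depth fields. This is a minimal sketch under stated assumptions, not the project's implementation. The photometric descriptor is hypothetical: a short tuple of floats compared by Euclidean distance. The function names `top_k_matches` and `median_depth` are also illustrative, and depth maps are plain nested lists.

```python
from math import dist
from statistics import median

def top_k_matches(query_desc, database, k):
    """Return the k (descriptor, depth_map) entries closest to the query.

    Hypothetical descriptor: an equal-length tuple of floats (e.g., a
    flattened grayscale thumbnail); similarity is plain Euclidean distance.
    """
    return sorted(database, key=lambda entry: dist(query_desc, entry[0]))[:k]

def median_depth(depth_maps):
    """Pixel-wise median of equally sized depth maps (lists of rows)."""
    rows, cols = len(depth_maps[0]), len(depth_maps[0][0])
    return [[median(d[r][c] for d in depth_maps) for c in range(cols)]
            for r in range(rows)]

# Toy database of (descriptor, depth map) pairs standing in for 3D images.
database = [
    ((0.1, 0.2, 0.3), [[1, 1], [2, 2]]),
    ((0.1, 0.2, 0.4), [[3, 1], [2, 4]]),
    ((0.9, 0.9, 0.9), [[9, 9], [9, 9]]),  # photometrically dissimilar entry
    ((0.3, 0.2, 0.3), [[2, 5], [2, 3]]),
]
matches = top_k_matches((0.1, 0.2, 0.3), database, k=3)
fused = median_depth([depth for _, depth in matches])
print(fused)  # [[2, 1], [2, 3]]
```

The median is a natural fusion rule here because one badly matched depth field cannot pull a pixel's fused value arbitrarily far, which a mean would allow.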

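Cross-bilateral (also called joint bilateral) filtering smooths the fused depth field while using the 2D query as the edge guide. Depth is therefore not averaged across intensity edges in the query. Below is a minimal, unoptimized sketch that assumes grayscale guide intensities in [0, 1]. The function name and the parameters `radius`, `sigma_s` and `sigma_r` are illustrative choices, not values taken from the project.

```python
from math import exp

def cross_bilateral(depth, guide, radius=1, sigma_s=1.0, sigma_r=0.1):
    """Joint (cross) bilateral filter: smooth `depth`, take edges from `guide`.

    Each output pixel is a weighted mean of nearby depth values; the weight is
    a spatial Gaussian times a Gaussian on the *guide* intensity difference,
    so depth is not mixed across intensity edges of the query image.
    """
    rows, cols = len(depth), len(depth[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            num = den = 0.0
            for dr in range(-radius, radius + 1):
                for dc in range(-radius, radius + 1):
                    rr, cc = r + dr, c + dc
                    if not (0 <= rr < rows and 0 <= cc < cols):
                        continue  # skip neighbours outside the image
                    w_s = exp(-(dr * dr + dc * dc) / (2 * sigma_s ** 2))
                    diff = guide[rr][cc] - guide[r][c]
                    w_r = exp(-(diff * diff) / (2 * sigma_r ** 2))
                    num += w_s * w_r * depth[rr][cc]
                    den += w_s * w_r
            out[r][c] = num / den  # den > 0: the centre pixel has weight 1
    return out

guide = [[0.0, 0.0, 1.0, 1.0] for _ in range(3)]  # query: dark left, bright right
depth = [[1.0, 1.2, 5.0, 5.2],
         [0.8, 1.0, 4.8, 5.0],
         [1.0, 1.0, 5.0, 5.0]]
smoothed = cross_bilateral(depth, guide)
for row in smoothed:
    print([round(v, 2) for v in row])
```

A plain Gaussian blur would mix near depths (about 1) and far depths (about 5) in the columns next to the edge. The guide term keeps the two regions apart, and the noise inside each region is still smoothed.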
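Applying the depth/disparity field to the 2D query to obtain the right image is a form of depth-image-based rendering. The text says only that occlusions and newly exposed areas are handled "in the usual way", so the sketch below assumes one common choice:

- a z-buffer test, in which the pixel with the larger disparity (nearer to the viewer) wins when two pixels land on the same column;
- hole filling from the neighbouring background pixel.

The sketch works on a single scanline and assumes that a pixel at column x with disparity d moves to column x - d in the right view. It then combines left and right RGB views into a red-cyan anaglyph, the viewing format mentioned for the examples above. All function names are hypothetical.

```python
def render_right_row(left_row, disparity_row):
    """Forward-warp one scanline of the left view into the right view.

    Assumed convention: a pixel at column x with disparity d (in pixels,
    larger = nearer) lands at column x - d in the right view.
    """
    width = len(left_row)
    right = [None] * width
    best = [float("-inf")] * width
    for x, (value, d) in enumerate(zip(left_row, disparity_row)):
        xt = x - round(d)
        # Occlusion handling: keep the nearer pixel when two land on one column.
        if 0 <= xt < width and d > best[xt]:
            right[xt] = value
            best[xt] = d
    # Newly exposed areas (holes) in a right view sit just right of foreground
    # objects, so fill each hole from the nearest filled pixel to its right.
    fill = None
    for x in range(width - 1, -1, -1):
        if right[x] is None:
            right[x] = fill
        else:
            fill = right[x]
    # Holes at the right border have no right neighbour; copy from the left.
    for x in range(1, width):
        if right[x] is None:
            right[x] = right[x - 1]
    return right

def red_cyan_anaglyph(left_rgb, right_rgb):
    """Red channel from the left view, green and blue from the right view."""
    return [[(l[0], r[1], r[2]) for l, r in zip(lrow, rrow)]
            for lrow, rrow in zip(left_rgb, right_rgb)]

left = [10, 10, 90, 90, 10, 10, 10, 10]  # bright foreground at columns 2-3
disp = [0, 0, 2, 2, 0, 0, 0, 0]          # foreground is nearer (disparity 2)
right = render_right_row(left, disp)
print(right)  # [90, 90, 10, 10, 10, 10, 10, 10]

anaglyph = red_cyan_anaglyph([[(200, 10, 10)]], [[(50, 120, 130)]])
print(anaglyph)  # [[(200, 120, 130)]]
```

Filling holes from the background side is a simple heuristic that avoids smearing the foreground into the exposed area. Production systems often use more elaborate inpainting.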